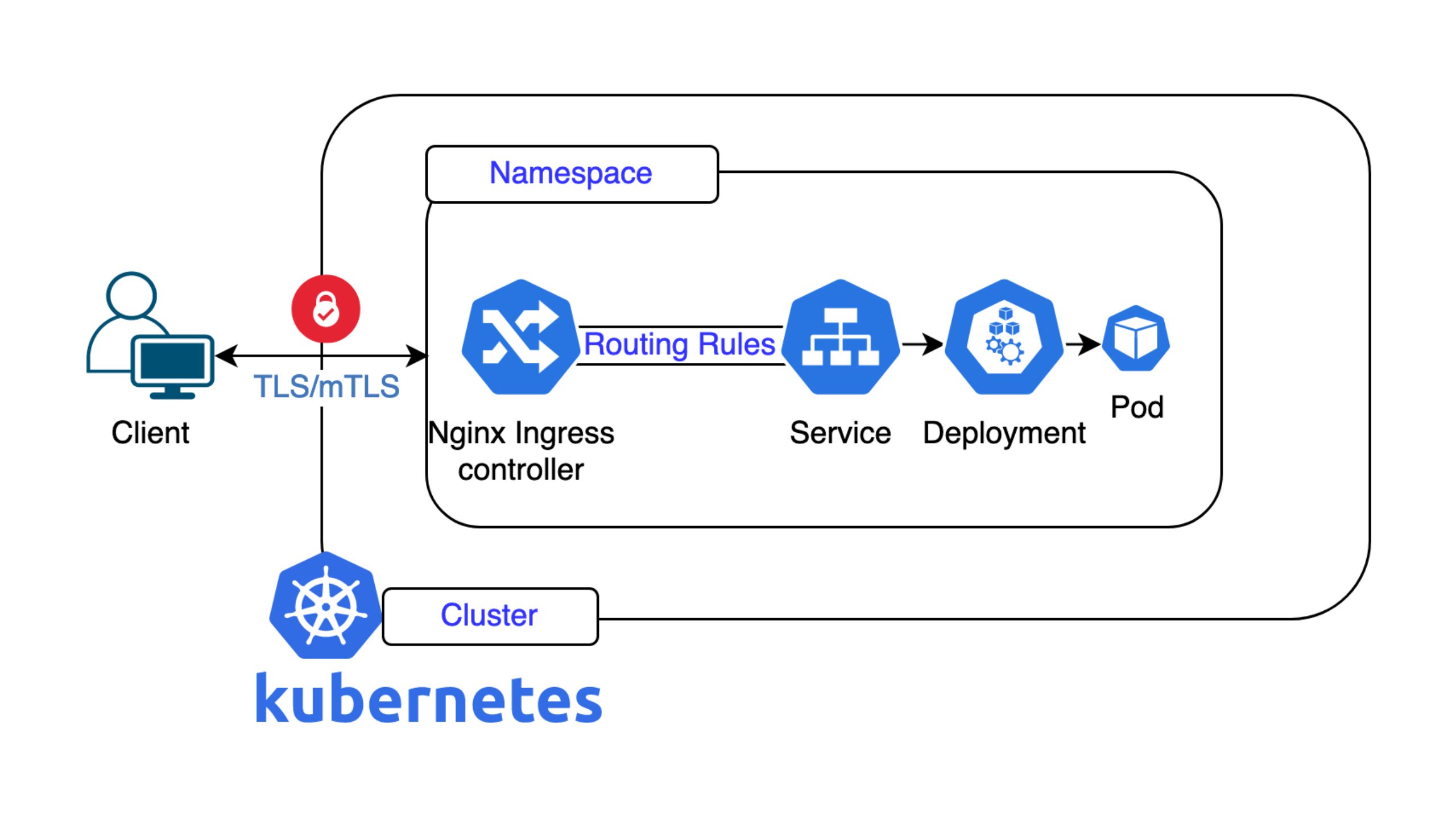

Virtualization, containers, and cloud computing have fundamentally changed the development and operation of modern applications. However, if you have to manage and provide a large number of containers, there is no way around container orchestration, because countless processes have to be managed simultaneously and in a resource-efficient manner. Tools such as the open-source Kubernetes provide powerful solutions for orchestrating a container-based environment.

In the following article, we explain what container orchestration is, what it is used for, and how it works. We go into detail about container orchestration with Kubernetes and introduce the nine Managed Google Kubernetes Engine (GKE). Finally, some use cases illustrate the usefulness of container orchestration.

Definition of container orchestration

Container orchestration is the automation of the processes for provisioning, organizing, managing, and scaling containers. It creates a dynamic system that groups many different containers, manages their interconnections, and ensures their availability. Container orchestration can be used in different environments: it can manage containers in a private or public cloud or on on-premises infrastructure.

Container orchestration details

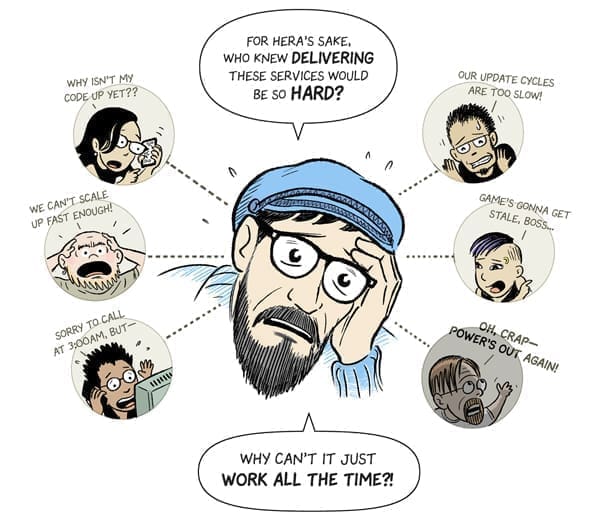

Container orchestration is closely linked to cloud computing and the delivery of applications in the form of many individual microservices. But what is Container Orchestration needed for and how does it work?

What is container orchestration needed for?

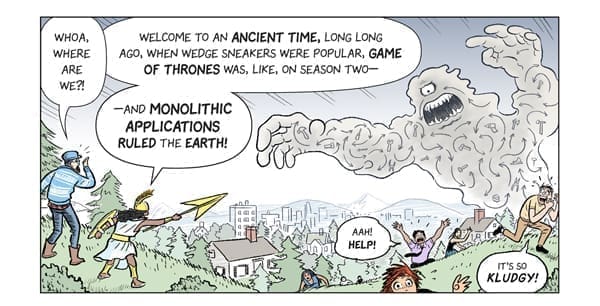

Applications that were developed without containers in mind are often referred to as classical or monolithic applications. All functions, classes, and sometimes services were included in a single source repository with many internal dependencies:

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-8.png, art and story from Scott McCloud

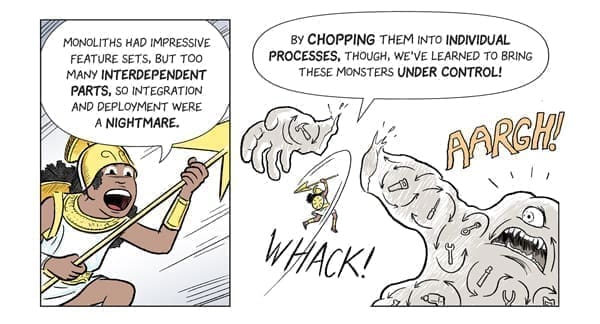

While these “application monsters” have an impressive range of functions, they are difficult to deploy, maintain, and scale. These applications cannot keep pace with the ever-faster processes of digital transformation.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-3.png, art and story from Scott McCloud

The current trend is moving towards microservices, which allow an IT team to “divide and conquer” large problems by splitting them into small tasks. Applications consist of many small, independent services with individual tasks. The microservices communicate with each other via defined interfaces and together form the actual application.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-9.png, art and story from Scott McCloud

Each microservice can be provided, operated, debugged, and updated individually without affecting the operation of the overall application. Microservices are a decisive step towards agile applications and the DevOps concept.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-10.png, art and story from Scott McCloud

Existing services are divided up into containers or a group of containers. The containers provide a kind of virtualized environment and have the complete runtime environment including libraries, configurations, and dependencies. When compared to full virtual machines, containers require fewer resources and can be started much faster. Many containers can be run in parallel on a single physical or virtual server. Together with the containers, the microservices can be moved easily and quickly between different environments. Containerized microservices form the basis for cloud-native applications.

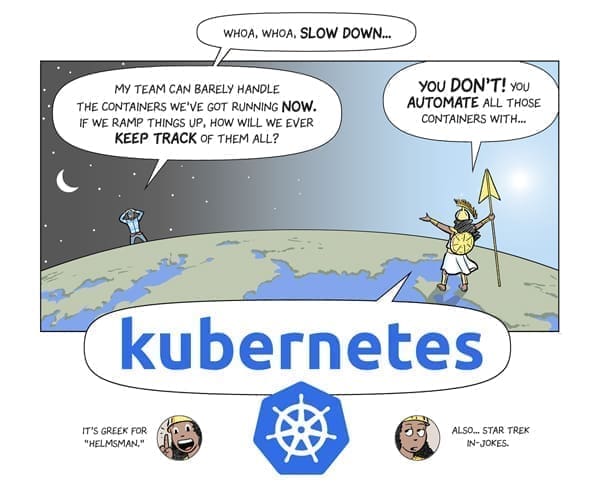

Complex applications consist of many microservices and containers that are operated on different systems and in different environments. Managing a large number of containers manually is a challenge for every administrator. One solution to this problem is container orchestration, for example with Kubernetes. It automates the deployment, organization, management, and scaling of containers.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-20.png, art and story from Scott McCloud

Which functions does container orchestration perform?

Container Orchestration offers the user the possibility to control, coordinate and automate all processes around the many individual containers. Container Orchestration performs the following tasks, among others:

- Provisioning the containers

- Configuring the containers

- Allocating resources

- Grouping the containers

- Starting and stopping the containers

- Monitoring the container status

- Updating the containers

- Failing over individual containers

- Scaling the containers

- Ensuring communication between the containers

The containers and their dependencies are described in configuration files. Container Orchestration uses these files to plan the deployment and operation of containers.
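As an illustration of such a configuration file, the following minimal sketch builds a Python dictionary that mirrors the shape of a Kubernetes Deployment manifest and serializes it to JSON. The service name `web`, the image `example.com/web:1.0`, the port, and the resource figures are hypothetical placeholders chosen for this example, not values from a real setup.

```python
import json


def build_deployment(name: str, image: str, replicas: int) -> dict:
    """Return a dict shaped like a Kubernetes Deployment manifest (apps/v1)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # desired number of identical Pods
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8080}],
                            # Resource requests tell the orchestrator how much
                            # capacity a node needs to host this container.
                            "resources": {
                                "requests": {"cpu": "250m", "memory": "128Mi"}
                            },
                        }
                    ]
                },
            },
        },
    }


# Hypothetical service name and image, for illustration only.
manifest = build_deployment("web", "example.com/web:1.0", replicas=3)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Manifests are usually written in YAML, but the same structure can be expressed as JSON, which is why this sketch needs only the standard library. The printed document describes the desired state (three replicas of one container); the orchestrator is then responsible for reaching and maintaining that state.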

Container Orchestration in Kubernetes

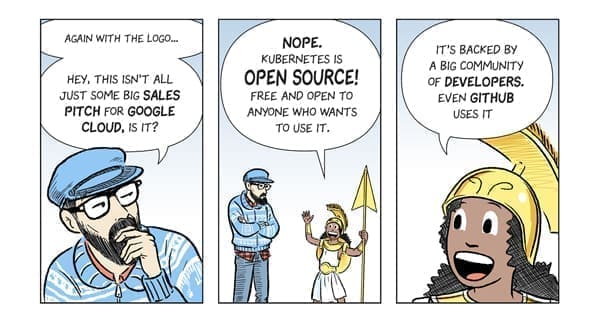

Kubernetes, often abbreviated K8s, is an open-source solution for orchestrating containers. It was originally developed by Google and released in 2014. In 2015, Google donated the Kubernetes project to the Cloud Native Computing Foundation (CNCF). The CNCF hosts many other projects in the cloud-native ecosystem.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-21.png, art and story from Scott McCloud

Although it is still relatively young software, Kubernetes dominates the orchestrator ecosystem. Kubernetes can control, operate, and manage containers, but it requires a container runtime such as containerd, CRI-O, or Docker Engine to actually run them. Compared to container orchestration with Docker Swarm, which is now integrated into Docker, Kubernetes offers a much wider range of features.

How does Kubernetes work?

Kubernetes is built around the following basic elements:

- Pods

- Nodes (formerly Minions)

- Cluster

- Master Nodes

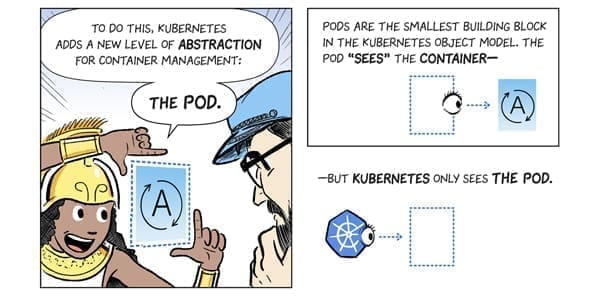

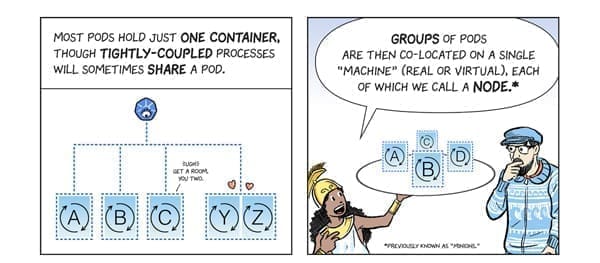

Within the Kubernetes architecture, a Pod is the smallest unit. A Pod can contain one or more containers.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-26.png, art and story from Scott McCloud

Individual Pods or groups of Pods run on a node, which is a physical or virtual machine. A container runtime such as containerd or Docker Engine is installed on each node.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-27.png, art and story from Scott McCloud

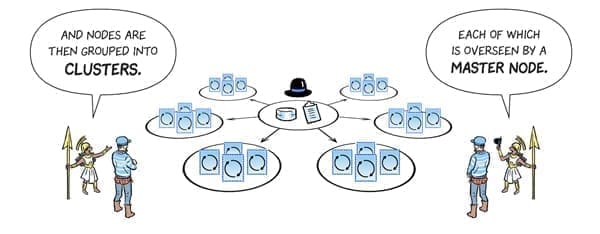

Several nodes can be combined into a cluster. Clusters consist of at least one master node and several worker nodes. The master nodes have the task of receiving commands from the administrator and controlling the worker nodes with their pods.

Source: https://cloud.google.com/kubernetes-engine/kubernetes-comic/assets/panel-28.png, art and story from Scott McCloud

The master decides which node is best suited for a particular task, determines the pods that run on the node and allocates resources. The master nodes receive regular status updates that allow the operation of the nodes to be monitored. If required, Pods with their containers can be automatically started on other nodes.
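The placement and failover behaviour described above can be sketched as a toy model. This is a simplified illustration under stated assumptions, not the algorithm Kubernetes uses: the real scheduler filters and scores nodes on many more criteria. The `Node` class, the function names, and the "most free CPU" rule are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_capacity: float                       # CPU cores the machine offers
    healthy: bool = True
    pods: dict = field(default_factory=dict)  # pod name -> requested CPU cores

    @property
    def cpu_free(self) -> float:
        return self.cpu_capacity - sum(self.pods.values())


def schedule(pod: str, cpu: float, nodes: list) -> str:
    """Place the pod on the healthy node with the most free CPU that fits it."""
    candidates = [n for n in nodes if n.healthy and n.cpu_free >= cpu]
    if not candidates:
        raise RuntimeError(f"no node can fit pod {pod!r}")
    target = max(candidates, key=lambda n: n.cpu_free)
    target.pods[pod] = cpu
    return target.name


def handle_node_failure(failed: Node, nodes: list) -> dict:
    """Mark a node as failed and restart its pods on the remaining nodes."""
    failed.healthy = False
    evicted, failed.pods = failed.pods, {}
    return {pod: schedule(pod, cpu, nodes) for pod, cpu in evicted.items()}


nodes = [Node("node-a", 2.0), Node("node-b", 4.0), Node("node-c", 4.0)]
placements = {pod: schedule(pod, 1.0, nodes) for pod in ("web-1", "web-2", "web-3")}
print(placements)

# Simulate the loss of node-b: its pods are restarted elsewhere.
moved = handle_node_failure(nodes[1], nodes)
print(moved)
```

The point of the sketch is the division of labour: the worker nodes only report capacity and health, while a central component decides where each Pod runs and reacts when a node disappears.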

The nine Managed Google Kubernetes Engine (GKE)

Kubernetes is a powerful tool and offers a huge feature set, but it also has a steep learning curve. Container orchestration with Kubernetes requires appropriate know-how and resources. With the nine Managed Google Kubernetes Engine (GKE), you get a fully managed environment where you can deploy and orchestrate your containers directly. The operation of the platform is managed by nine.

What is the nine Managed GKE and how does it work?

The nine Managed GKE is a managed service product. It is based on the Google Kubernetes Engine (GKE), an environment for deploying, managing, and scaling containerized applications on Google infrastructure. The containers run on a secure, easily scalable cluster consisting of multiple machines. Nine adds features on top of GKE and takes over the complete operation of the cluster: it performs regular backups, integrates external services, and handles storage management, monitoring, and replication. You can fully concentrate on developing your applications and orchestrating your containers on the managed platform.

All data is securely stored in Switzerland, as this is a Swiss service. At the same time, the worldwide Google infrastructure offers the possibility of global scaling.

Concrete Use Cases

If you have applications that are divided into microservices, or you want to redesign existing applications as cloud-native applications, you can run the containerized microservices on a cluster with nine Managed GKE. Nine takes care of the operation of the cluster, while you concentrate on the development and management of the containers. The resources this frees up allow you to ease budget bottlenecks, catch up on backlogs in application development, or eliminate technical deficits in existing applications. Below are some use cases that illustrate the benefits of container orchestration.

Agile, dynamically growing applications of a startup

Startups with new business ideas need agile applications. Functions have to be changed, extended, or adapted on a daily basis. Once the first successes are achieved, the requirements in terms of resources and scaling increase. With modern, containerized applications, startups cover all requirements for agile, dynamically growing applications. By dividing the application into many different microservices and making them available via containers, individual functions can be changed or scaled without affecting the entire application.
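To make the scaling of an individual service concrete, here is a minimal sketch of a proportional scaling rule of the kind documented for the Kubernetes Horizontal Pod Autoscaler: the replica count is multiplied by the ratio of observed load to target load and rounded up. The function name, the default bounds, and the sample load figures are assumptions for this example, and a real autoscaler applies additional tolerance and stabilization rules that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_load: float, target_load: float,
                     min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Scale the replica count in proportion to observed load versus target load."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    wanted = math.ceil(current_replicas * current_load / target_load)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))


# Hypothetical average CPU utilisation in percent for one microservice.
print(desired_replicas(3, current_load=90, target_load=60))   # scale up
print(desired_replicas(5, current_load=20, target_load=60))   # scale down
print(desired_replicas(8, current_load=100, target_load=50))  # capped at max_replicas
```

Because each microservice has its own replica count, a rule like this can grow the one service under load without touching the rest of the application.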

Applications with high availability

Many companies depend on the high availability of their business-critical applications. Even short failures can lead to immense sales losses or a loss of reputation. Industries that have high demands on the availability of certain applications include manufacturing and finance. In a modern application consisting of containerized microservices, container orchestration takes care of the uninterrupted operation. For example, if individual computers fail, the affected containers are automatically started on other computers in a redundantly designed cloud environment. Manual intervention is not necessary. Even when updating or scaling the services, the basic operational readiness of the entire application is not affected.
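How an update can proceed without reducing capacity can be sketched with a small simulation of a rolling update. Kubernetes Deployments offer a rolling update strategy with surge and unavailability limits; the toy below only models the surge idea (start new Pods first, then stop old ones) and ignores readiness checks. The function name, the `max_surge` parameter as used here, and the version labels are assumptions for this example.

```python
def rolling_update(replicas: int, old: str, new: str, max_surge: int = 1) -> list:
    """Replace old-version pods in batches, recording the pod set after each step."""
    if max_surge < 1:
        raise ValueError("max_surge must be at least 1")
    pods = [old] * replicas
    history = [list(pods)]
    if old == new:
        return history  # nothing to replace
    while old in pods:
        batch = min(max_surge, pods.count(old))
        # Start the new pods first, so capacity never drops below `replicas`.
        pods.extend([new] * batch)
        history.append(list(pods))
        # Only then stop the same number of old pods.
        for _ in range(batch):
            pods.remove(old)
        history.append(list(pods))
    return history


# Hypothetical version labels for one service with three replicas.
steps = rolling_update(replicas=3, old="web:1.0", new="web:1.1")
for step in steps:
    print(len(step), step)
```

At every recorded step at least three Pods are running, which is the property that keeps the application available while its version changes.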

Concentration on application development – no resources for operation

In most cases the financial, human, or technical resources are limited. If applications are provided on the basis of a fully managed, containerized, and cloud-based environment such as the nine Managed GKE, resources are freed up as typical operational tasks are eliminated. These resources can be used for application development or for eliminating technical shortcomings of existing applications. The company focuses more on its core business and the chances of success increase.

The nine cloud navigators are your partner for cloud-native applications and container orchestration

If you want to benefit from the advantages of cloud computing and cloud-native applications and accelerate your time-to-market, then the nine cloud navigators are the right partner for you. With the nine Managed GKE, your data is securely stored in Switzerland. At the same time, you can use the global scalability of Google Cloud. You do not have to deal with the complexity of managing and operating a cluster yourself.

Our Kubernetes experts help you with container orchestration and provide you with a fully managed environment. We will be happy to answer your questions or introduce you to our managed cloud solution.