As you may have noticed, our v1 S3 product has officially been deprecated, and the migration to v2 is now live. In this blog post, we’ll delve into the reasons behind Nine’s decision to make this transition, how we pulled it off and, most importantly, whether it was worth the effort. So grab a coffee, get comfortable, and let’s dive into the details of this migration journey.

Why

Since its introduction on Nine’s self-service platform in mid-2021, the v1 S3 product has been plagued by problems, and the ride has not exactly been smooth.

Our v1 S3 service is an abstraction over a managed service that we obtain from an external vendor. The package includes software, support and dedicated standalone hardware on which the vendor runs the service.

Painfully slow performance in our staging environment turned running tests into an exercise in patience, and frequent outages disrupted our development workflow. It was clear that something had to change. Unfortunately, our observations were not limited to staging.

In production, the performance was abysmal too, and the vendor’s support was unable to offer us viable solutions. Despite our investment in this product, both financially and operationally, the returns fell far short of expectations. Customer feedback echoed our frustrations, and we knew it was time for a change.

This is why we strongly recommend that you move your v1 buckets to the new v2 service: v2 does not have these issues, and you will benefit from better performance and lower prices.

What

Given the persistent issues with the v1 S3 product, the need for an alternative was evident early on. Learning from past mistakes, we conducted thorough testing this time around, evaluating not just performance but also the ease of integration with our existing self-service API.

After considering a range of options, we settled on Nutanix Objects as the way forward. As we already rely on Nutanix for virtualisation for a variety of our services, such as NKE, we could leverage our existing infrastructure and familiarity with Nutanix solutions. It was a natural fit for our evolving needs.

How

Integrating the v2 product into our existing API worked like a charm. The only difference in the Nine API was that you needed to set `backendVersion` to `v2`.

Naturally, the most difficult challenge when replacing a product like this is data migration. We wanted to move to v2 quickly and leave v1 behind, but we did not want to leave our customers with all the work of migrating their data themselves. So we built an automatic way of syncing data from the old buckets to the new ones.

The BucketMigration resource in the Nine API is simple to use. You only need to set the following parameters:

- A source v1 bucket and a user with read permission

- A destination v2 bucket and a user with write permission

- An interval which decides how often the sync should run

- A configuration that defines whether data that exists in the destination bucket should be overwritten or not
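To make the four parameters concrete, here is a minimal Python sketch that models them as a data structure. The class and field names (`BucketMigrationSpec`, `source_user`, `overwrite_existing` and so on) are hypothetical and do not reflect the actual Nine API schema; the bucket and user names in the example are made up as well.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class BucketMigrationSpec:
    """Hypothetical model of the BucketMigration parameters.

    Field names are illustrative only; they are not the Nine API schema.
    """

    source_bucket: str        # v1 bucket to read from
    source_user: str          # user with read permission on the source bucket
    dest_bucket: str          # v2 bucket to write to
    dest_user: str            # user with write permission on the destination bucket
    interval: timedelta       # how often the sync should run
    overwrite_existing: bool  # may objects already in the destination be overwritten?

    def __post_init__(self) -> None:
        # Simple sanity checks; the real API may validate differently.
        if not self.source_bucket or not self.dest_bucket:
            raise ValueError("source and destination buckets are required")
        if self.interval <= timedelta(0):
            raise ValueError("interval must be positive")


spec = BucketMigrationSpec(
    source_bucket="loki-v1",
    source_user="migration-reader",
    dest_bucket="loki-v2",
    dest_user="migration-writer",
    interval=timedelta(minutes=15),
    overwrite_existing=False,
)
print(spec.interval)  # 0:15:00
```

Freezing the dataclass means a spec cannot be modified after validation, which keeps the sanity checks in `__post_init__` meaningful.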

Behind the scenes, we use rclone, a tool we can highly recommend for managing S3 buckets. For migrations, two of its commands matter: `sync` and `copy`.

Sync: This command makes the destination identical to the source, transferring new and changed files. It also deletes any files in the destination that are not present in the source.

Copy: In contrast, this command transfers new and changed files from the source to the destination but never deletes anything in the destination.

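```shell
# Make destpath identical to sourcepath (deletes files that exist only in destpath)
rclone sync source:sourcepath dest:destpath

# Copy new and changed files from sourcepath to destpath; never deletes anything
rclone copy source:sourcepath dest:destpath
```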

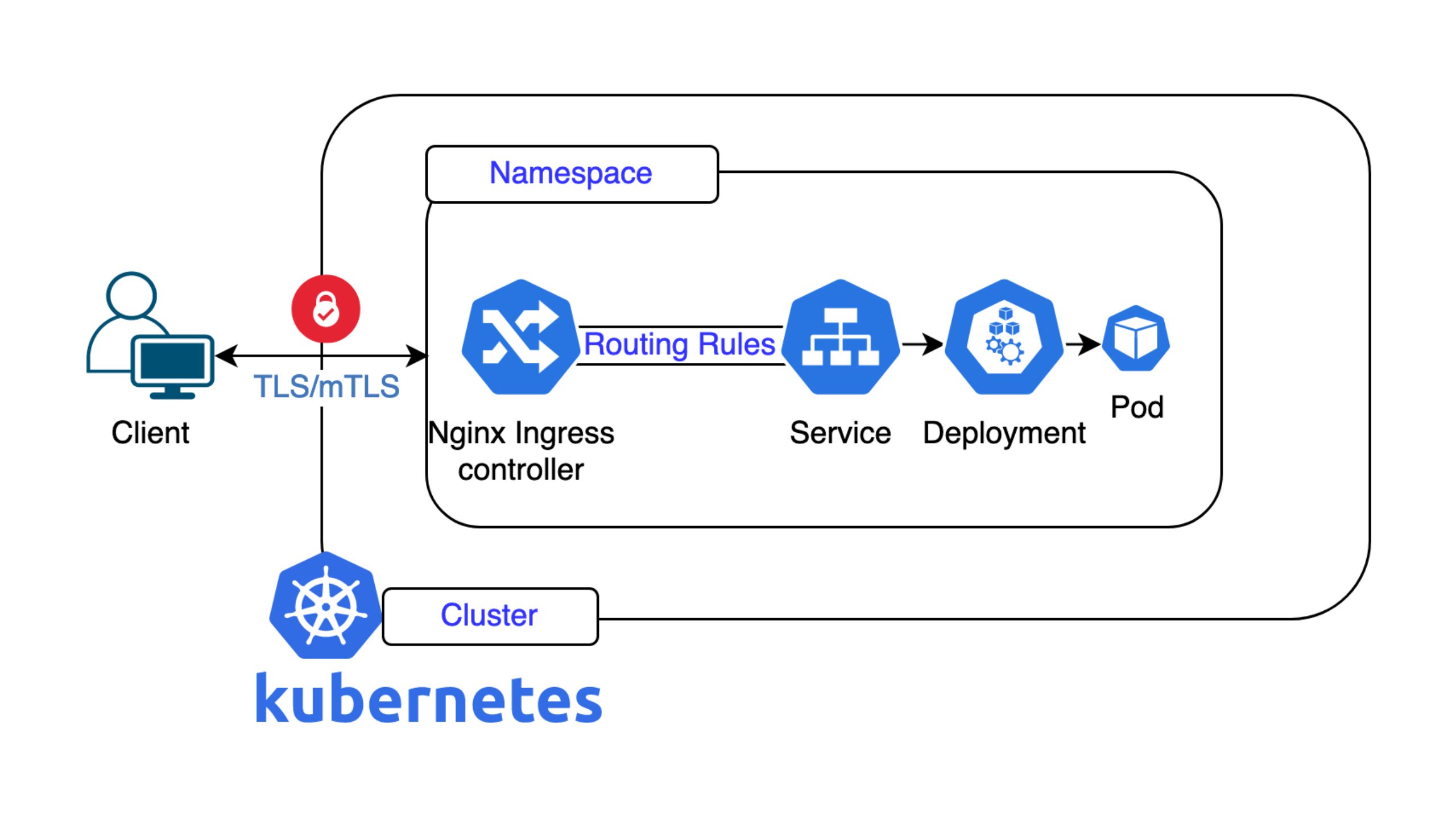
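The difference between the two commands can be modelled in a few lines of Python by treating a bucket as a dictionary from object key to content. This is a simplified sketch: real rclone decides whether an object has changed by comparing size and modification time or checksums rather than full contents, and the function names are our own.

```python
Objects = dict[str, bytes]  # object key -> object content


def rclone_copy(src: Objects, dst: Objects) -> Objects:
    """Simplified model of `rclone copy`: transfer new or changed objects,
    never delete anything in the destination."""
    result = dict(dst)
    for key, data in src.items():
        if result.get(key) != data:  # missing or different in the destination
            result[key] = data
    return result


def rclone_sync(src: Objects, dst: Objects) -> Objects:
    """Simplified model of `rclone sync`: copy, then drop every object in
    the destination that does not exist in the source."""
    result = rclone_copy(src, dst)
    return {key: data for key, data in result.items() if key in src}


source = {"a.log": b"new", "b.log": b"v2"}
destination = {"b.log": b"v1", "c.log": b"only-in-dest"}

print(sorted(rclone_copy(source, destination)))  # ['a.log', 'b.log', 'c.log']
print(sorted(rclone_sync(source, destination)))  # ['a.log', 'b.log']
```

Both functions return a new dictionary and leave their inputs untouched, so the same starting state can be fed to each.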

The rclone commands run as pods in our Kubernetes infrastructure, scheduled by a Kubernetes CronJob:

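```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: bucketmigration
  namespace: myns
spec:
  # Start a Job four times an hour, at minutes 7, 22, 37 and 52.
  schedule: "7,22,37,52 * * * *"
  jobTemplate:
    spec:
      parallelism: 1
      template:
        spec:
          # Job pods must use OnFailure or Never; the default "Always" is rejected.
          restartPolicy: OnFailure
          containers:
            - name: rclone
              image: docker.io/rclone/rclone:1.65.2
              command:
                - rclone
              # "source" and "dest" are rclone remotes; their S3 configuration is not shown.
              args:
                - copy
                - source:<bucketname>
                - dest:<bucketname>
```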

This CronJob starts a Job four times an hour, at minutes 7, 22, 37 and 52, and each Job launches the rclone pod that performs one migration run.
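The schedule `7,22,37,52 * * * *` fires every 15 minutes, 7 minutes past each quarter hour. The post does not say how the BucketMigration interval is turned into a cron schedule; the helper below is a hypothetical Python sketch that assumes a fixed minute offset and an interval that divides 60 evenly.

```python
def cron_minute_field(interval_minutes: int, offset: int = 0) -> str:
    """Return the minute field of a cron schedule that fires every
    `interval_minutes` minutes, starting `offset` minutes past the hour."""
    if not 0 < interval_minutes <= 60 or 60 % interval_minutes != 0:
        raise ValueError("interval_minutes must divide 60 evenly")
    if not 0 <= offset < interval_minutes:
        raise ValueError("offset must lie in [0, interval_minutes)")
    return ",".join(str(minute) for minute in range(offset, 60, interval_minutes))


schedule = f"{cron_minute_field(15, offset=7)} * * * *"
print(schedule)  # 7,22,37,52 * * * *
```

Standard cron syntax also lets you write the same schedule with a step value, as `7-59/15 * * * *`; listing the minutes explicitly just makes each run time visible at a glance.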

Now that data can seamlessly migrate to the new v2 buckets, let’s discuss how to update applications to use them.

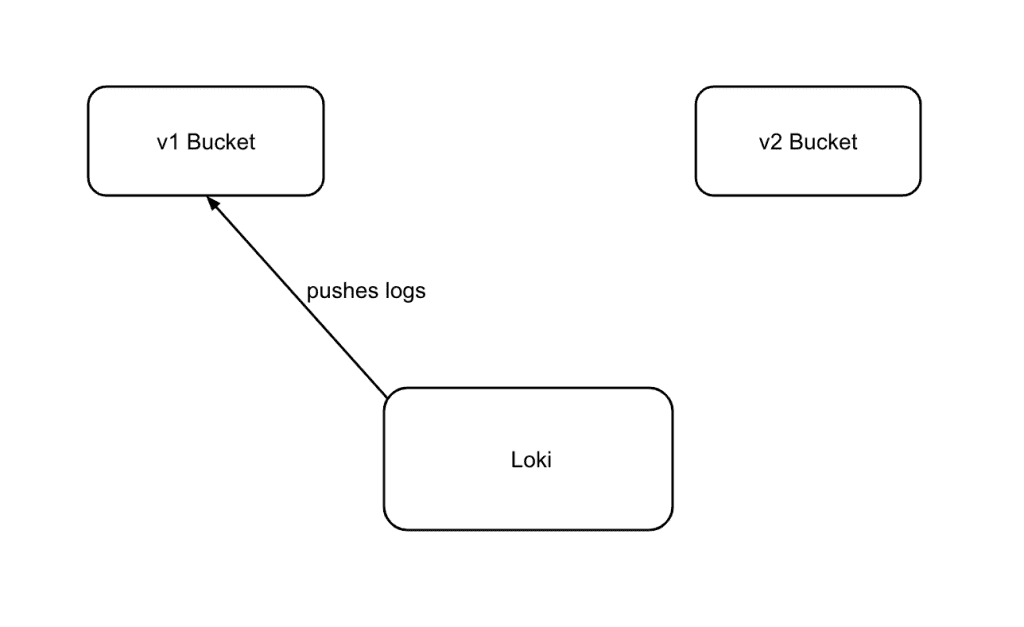

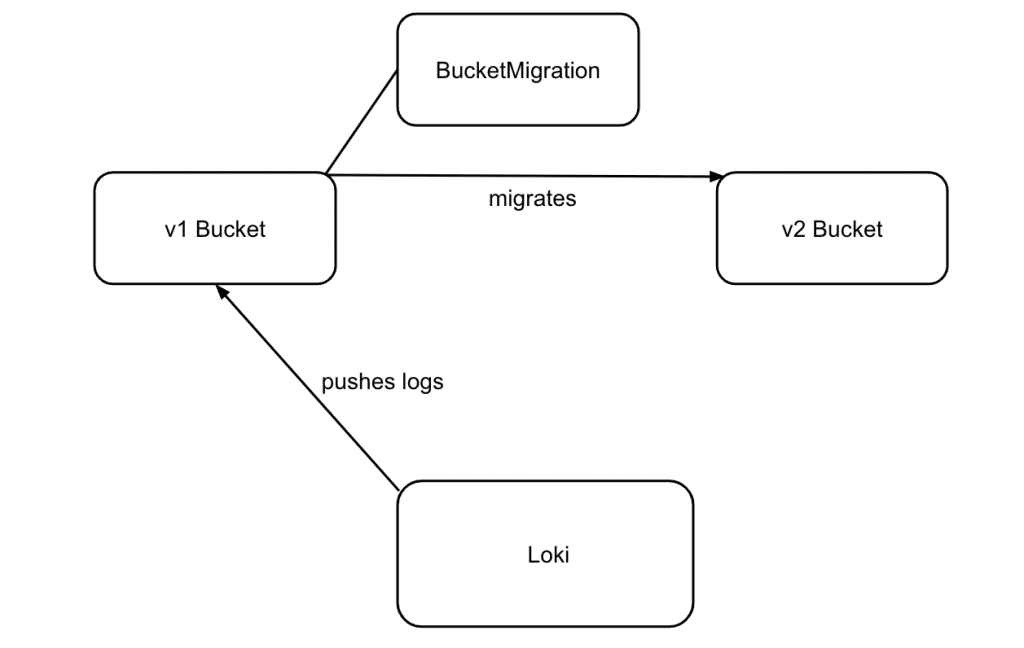

Take Loki, a Nine-managed service that stores its logs on S3, as an example. We’ve prepared a v2 bucket for it. Note that for all internal migrations we’ve set “Delete extraneous Objects in Destination” to false, as no data is being written to the v2 bucket at this point.
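Since the CronJob runs `rclone copy` and internal migrations use “Delete extraneous Objects in Destination” set to false, a natural reading is that this setting chooses between `copy` and `sync`. The Python sketch below encodes that assumed mapping; the function name and bucket names are hypothetical, and the remote names `source` and `dest` are taken from the CronJob.

```python
def rclone_args(source_bucket: str, dest_bucket: str, delete_extraneous: bool) -> list[str]:
    """Build an rclone command line for one migration run (hypothetical mapping).

    "source" and "dest" are rclone remote names that must be configured
    separately with the respective S3 credentials.
    """
    # sync also deletes destination objects that are missing in the source;
    # copy only adds or updates objects and never deletes.
    subcommand = "sync" if delete_extraneous else "copy"
    return ["rclone", subcommand, f"source:{source_bucket}", f"dest:{dest_bucket}"]


print(rclone_args("loki-v1", "loki-v2", delete_extraneous=False))
# ['rclone', 'copy', 'source:loki-v1', 'dest:loki-v2']
```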

We create a BucketMigration in the Nine API that will sync the data to v2.

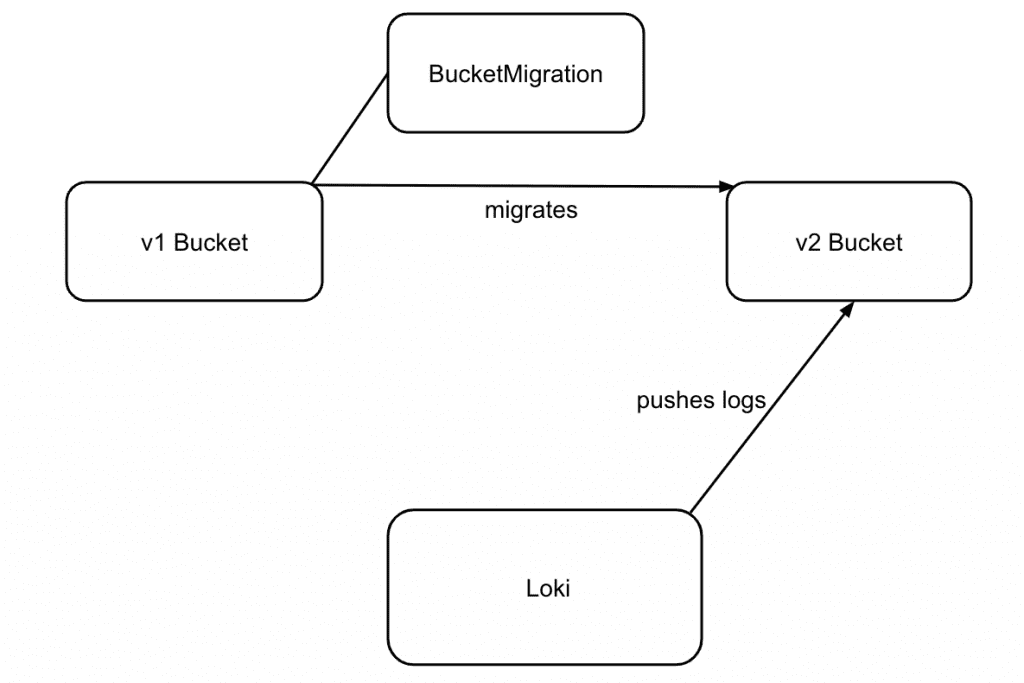

After the initial run has completed, we switch Loki to push to the v2 bucket.

Once Loki is writing to v2, we let one more migration run take place to copy any data that is in v1 but not yet in v2, such as objects written after the initial run. And we are done.
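The cutover sequence can be simulated with two Python dictionaries standing in for the v1 and v2 buckets. The sketch below, with made-up object names, shows that the final run picks up objects written to v1 after the initial run. It also shows that, given the sync semantics described earlier, a run with deletion enabled would remove objects Loki had already written to v2 after the switch.

```python
def copy_objects(src: dict[str, str], dst: dict[str, str]) -> None:
    """Model of a copy run (deletion disabled): add or update, never delete."""
    dst.update(src)


def sync_objects(src: dict[str, str], dst: dict[str, str]) -> None:
    """Model of a sync run (deletion enabled): copy, then delete extras."""
    dst.update(src)
    for key in [k for k in dst if k not in src]:
        del dst[key]


v1 = {"chunk-1": "logs", "chunk-2": "logs"}
v2: dict[str, str] = {}

copy_objects(v1, v2)    # 1. initial migration run
v1["chunk-3"] = "logs"  # 2. Loki still writes to v1 until it is switched
v2["chunk-4"] = "logs"  # 3. after the switch, new writes land in v2 only

with_copy = dict(v2)
copy_objects(v1, with_copy)  # 4a. final run without deletion
with_sync = dict(v2)
sync_objects(v1, with_sync)  # 4b. the same run with deletion enabled

print(sorted(with_copy))  # ['chunk-1', 'chunk-2', 'chunk-3', 'chunk-4']
print(sorted(with_sync))  # ['chunk-1', 'chunk-2', 'chunk-3']
```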

Was it worth it?

Reflecting on such a substantial change, it is essential to evaluate whether it has paid dividends. In this case, the answer is a resounding yes.

The v2 S3 product has delivered a remarkable performance boost, both for the buckets themselves and for the services that depend on them: the time it takes to push an object has dropped by 56%. Our tests now complete significantly faster, and performance in our production environment has improved markedly.

Moreover, the migration has brought a substantial cost reduction, with prices plummeting by two thirds (about 67%), from 0.09 CHF to 0.03 CHF.
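For reference, the relative price reduction follows directly from the two prices quoted above:

```latex
\frac{0.09\,\text{CHF} - 0.03\,\text{CHF}}{0.09\,\text{CHF}} = \frac{0.06}{0.09} = \frac{2}{3} \approx 66.7\,\%
```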

This means not only a faster and more efficient product, but also a more cost-effective one. It’s a win-win scenario, and we couldn’t be more delighted with the outcome.

A look into the future

Currently, the BucketMigration feature is limited to syncing data exclusively between v1 and v2 buckets. However, in the near future, we plan to expand its functionality to support v2 to v2 synchronisation as well, as this capability is already technically feasible. Additionally, we’re exploring the possibility of broadening the migration scope to include external buckets, such as those from Google Cloud Storage (GCS) or Amazon S3. This expansion would enable you to leverage Nine’s S3 product for purposes such as off-site backup solutions.

As always, we are open to hearing your suggestions, needs and ideas for our products. If you are planning a bucket migration or have questions about the migration details, please do not hesitate to get in touch.