Peak Privacy

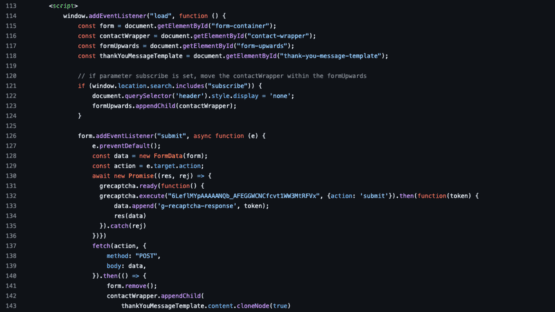

We supported Peak Privacy in designing and building a specialized server infrastructure for LLM inference. The two teams worked closely throughout, exchanging ideas and solving problems together, so that each partner's expertise went into matching the hardware precisely to the software requirements.

Products Utilised

The Challenge

The Solution

The Result

«Working with Nine was a real stroke of luck. We finally found a partner in Switzerland who not only understands our specific needs for LLM inference but also has the technical expertise to build a perfectly tailored, high-performance, and cost-attractive server solution. It was a game changer for us.»